End-to-End API Testing: How Generative AI Is Revolutionizing Communication Platforms

End-to-End Testing for Communication APIs: Where Generative AI Shines

In today's digitally connected world, communication APIs serve as the lifeline of modern platforms. Whether it's transactional emails, SMS notifications, push alerts, or in-app messages, APIs are responsible for delivering millions of messages across channels in real time. The stakes are high: any disruption in the flow can lead to failed transactions, customer dissatisfaction, or lost revenue.

As companies scale, ensuring that these APIs work seamlessly under various conditions becomes increasingly complex. Traditional testing methods often fall short when it comes to handling the scale, variability, and interdependencies of full message flows. This is where generative AI in software testing is stepping in, redefining how QA teams approach end-to-end validation for communication APIs.

This article explores how generative AI is revolutionizing API testing—automating test creation, detecting anomalies, and ensuring message reliability across complex workflows.

Understanding the Complexity of Communication APIs

Communication APIs—like those powering platforms such as SendBridge—serve dynamic, high-throughput use cases. These include:

- Transactional messages: Password resets, order confirmations, OTPs.
- Marketing campaigns: Emails or SMS blasts personalized for users.
- System alerts: Notifications for system events or user actions.
- Multi-channel delivery: SMS, email, push, and webhooks.

Each message goes through multiple stages: request processing, content rendering, delivery queuing, third-party gateway interaction, response parsing, and success/failure callbacks. Minor bugs in any of these stages can derail the entire communication process.

Testing these interactions in isolation is insufficient. What's needed is true end-to-end testing that can simulate real-world flows across all API layers and external integrations—something traditional methods struggle to scale.
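To make the idea of validating a full flow concrete, here is a minimal Python sketch that checks a recorded trace of one message against the stages listed above. The `Stage` enum and the trace format are assumptions made for illustration; a real platform would expose its own event names and logs.

```python
from enum import IntEnum


class Stage(IntEnum):
    """Lifecycle stages named in the text, in the order a message passes through them."""
    REQUEST_PROCESSING = 1
    CONTENT_RENDERING = 2
    DELIVERY_QUEUING = 3
    GATEWAY_INTERACTION = 4
    RESPONSE_PARSING = 5
    CALLBACK = 6


def trace_problems(trace):
    """Return a list of problems found in a recorded stage trace (empty if clean)."""
    problems = []
    # Every stage must appear at least once.
    for stage in Stage:
        if stage not in trace:
            problems.append(f"missing stage: {stage.name}")
    # Stages must never move backwards.
    for earlier, later in zip(trace, trace[1:]):
        if later < earlier:
            problems.append(f"{later.name} ran after {earlier.name}")
    return problems


# A clean trace visits every stage in order.
good_trace = list(Stage)

# A faulty trace: rendering happens after queuing, and the flow stops early.
bad_trace = [
    Stage.REQUEST_PROCESSING,
    Stage.DELIVERY_QUEUING,
    Stage.CONTENT_RENDERING,
    Stage.GATEWAY_INTERACTION,
]

print(trace_problems(good_trace))
print(trace_problems(bad_trace))
```

An isolated test of the rendering step would pass on the faulty trace; only a check across the whole flow notices that rendering ran after queuing and that no callback ever fired.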

What Is Generative AI in Software Testing?

Generative AI refers to a class of artificial intelligence models designed to generate new outputs—such as text, images, code, or simulations—based on patterns learned from large datasets. These models don't simply retrieve information; they create novel content by identifying relationships and extrapolating from existing data.

In the realm of Quality Assurance (QA) and software testing, generative AI represents a transformative advancement. Instead of relying on manually written test scripts or rigid automation frameworks, generative AI in software testing introduces systems that can:

- Automatically generate comprehensive test cases: These AI systems can scan application workflows, user journeys, and business rules to create test cases that cover not only common scenarios but also rare and complex edge cases. This results in higher test coverage and faster detection of issues.
- Predict edge cases and failure paths: Generative models can evaluate previous failures, logs, and usage patterns to determine which inputs or sequences are likely to break the system. This predictive capability allows testers to proactively assess areas of high risk.
- Self-heal tests as APIs evolve: When changes are made to the API structure, request payloads, or UI layout, traditional test scripts often break. Generative AI tools can automatically adjust test steps, element locators, and validations, maintaining functionality without manual rework.
- Adapt to system behavior using machine learning: These tools learn how a system behaves in various states (normal, degraded, overloaded, etc.) and generate adaptive test scenarios accordingly. They become more effective over time as they encounter new patterns and system responses.

These AI-powered capabilities are not hard-coded or rule-based. Instead, they're powered by deep learning models trained on vast amounts of QA data, user interactions, and production logs. By analyzing this information, the AI forms a contextual understanding of how the application functions and what kinds of issues may arise.

For example, in testing a messaging API, a generative AI tool might learn that a specific type of payload leads to a delay in delivery or that certain user agents consistently receive malformed responses. It can then automatically generate and run tests targeting these weak points—without requiring a human tester to identify them.

Another advantage is that these tools work across layers—UI, API, backend, and third-party integrations. They can simulate real-world user behavior (like resending a failed email or switching delivery channels when a server is down), ensuring the entire flow is validated holistically.

In summary, generative AI in software testing is a significant leap from traditional testing methods. It not only accelerates test creation and execution but also enhances quality, resilience, and scalability. These systems think more like testers, act faster than humans, and continuously improve with each run, making them an indispensable part of modern QA strategy.

Why Traditional API Testing Isn't Enough

Most legacy testing approaches for APIs rely on hardcoded requests, assertions, and test suites. These methods have several limitations:

- High maintenance cost: Any change in the API schema or workflow requires manual updates.
- Low test coverage: It's difficult to cover all user flows, content variations, and error conditions.
- Limited scalability: Creating end-to-end tests for hundreds of message types is time-consuming.
- Static logic: Traditional tools cannot adapt to changing payloads or third-party responses.

This makes it difficult for QA teams to keep up with rapid feature releases and message personalization logic. What's needed is dynamic, AI-powered testing that evolves with the product.

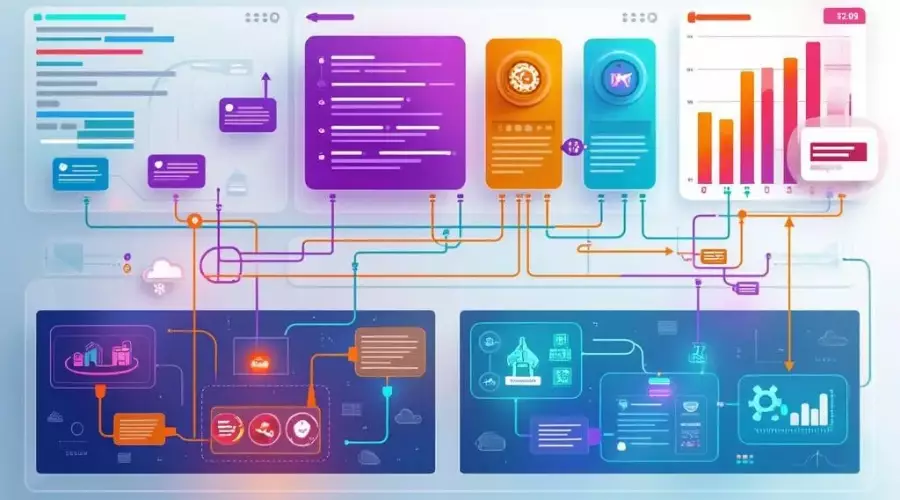

Where Generative AI Excels in End-to-End Testing

Generative AI doesn't just automate—it intelligently generates. Here's what sets it apart:

- Dynamic test creation: Based on user behavior, payload data, and historical test results.
- Risk-based prioritization: Focuses test coverage on high-impact areas such as delivery failures or corrupted content.
- Smart assertions: Learns expected values and formats based on real-world outcomes, reducing false positives.
- Adaptive execution: Adjusts test flows in real time depending on conditions such as rate limits, delayed responses, or gateway errors.

With these capabilities, QA teams can move from script-heavy manual testing to intelligent, scalable validation of every layer in the messaging pipeline.
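The "smart assertions" idea can be illustrated with a deliberately simple stand-in. The Python sketch below does not use a generative model; it only records which value types appeared in known-good responses and flags new responses that deviate. The field names (`message_id`, `status`, `retry_after`) are hypothetical.

```python
def learn_shape(samples):
    """Infer, per field, the set of value types seen across known-good responses."""
    shape = {}
    for sample in samples:
        for field, value in sample.items():
            shape.setdefault(field, set()).add(type(value))
    return shape


def check_against_shape(response, shape):
    """Return human-readable violations; an empty list means the response fits."""
    violations = []
    for field, allowed in shape.items():
        if field not in response:
            violations.append(f"missing field: {field}")
        elif type(response[field]) not in allowed:
            violations.append(
                f"{field}: unexpected type {type(response[field]).__name__}"
            )
    return violations


# Hypothetical responses observed from a healthy system.
known_good = [
    {"message_id": "m-1", "status": "queued", "retry_after": None},
    {"message_id": "m-2", "status": "sent", "retry_after": 30},
]
shape = learn_shape(known_good)

ok_response = {"message_id": "m-3", "status": "sent", "retry_after": None}
odd_response = {"message_id": 42, "status": "sent"}

print(check_against_shape(ok_response, shape))
print(check_against_shape(odd_response, shape))
```

Because `retry_after` was seen both as `None` and as an integer, neither form is reported as a failure. Tolerating what has been observed in practice is how learned assertions cut down on false positives, compared with a hand-written check that assumed a single type.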

Message Flow Testing: Generating Test Scenarios at Scale

Consider a real-world messaging workflow:

- A user places an order on an e-commerce site.
- The API triggers an order confirmation email.
- The email contains personalized content (user name, order ID).
- The delivery system queues and sends the email.
- A third-party SMTP service returns a delivery status.
- The system logs success and triggers a push notification.

Testing this entire flow manually is cumbersome. With generative AI, tools can:

- Generate test flows for every combination of user type, message content, and status.
- Validate content personalization against real user data.
- Simulate gateway responses like soft bounces, hard bounces, throttling, or spam rejections.
- Ensure logging and alerts fire appropriately.

This level of test generation—previously impossible to scale manually—is now achievable with AI.
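As a rough picture of what "every combination" means, the Python sketch below enumerates a scenario matrix with plain `itertools.product`. This is ordinary combinatorial enumeration, not generative AI; an AI tool would be expected to extend and prioritize such a matrix. The user types, template names, and the expected action for each gateway outcome are assumptions chosen for illustration.

```python
from itertools import product

# Hypothetical dimensions of the test matrix.
USER_TYPES = ["guest", "registered", "premium"]
TEMPLATES = ["order_confirmation", "password_reset"]
GATEWAY_OUTCOMES = [
    "delivered", "soft_bounce", "hard_bounce", "throttled", "spam_rejected",
]

# Assumed policy: what the system should do for each simulated gateway outcome.
EXPECTED_ACTION = {
    "delivered": "log_success",
    "soft_bounce": "retry_later",
    "hard_bounce": "suppress_address",
    "throttled": "retry_later",
    "spam_rejected": "raise_alert",
}


def build_scenarios():
    """Return one scenario dict per (user type, template, gateway outcome) triple."""
    return [
        {
            "user_type": user_type,
            "template": template,
            "gateway_outcome": outcome,
            "expected_action": EXPECTED_ACTION[outcome],
        }
        for user_type, template, outcome in product(
            USER_TYPES, TEMPLATES, GATEWAY_OUTCOMES
        )
    ]


scenarios = build_scenarios()
print(len(scenarios))  # 3 user types * 2 templates * 5 outcomes = 30
print(scenarios[0])
```

Even this tiny matrix yields 30 flows. Adding a fourth dimension such as locale or channel multiplies the count again, which is why hand-written end-to-end suites rarely cover the full space.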

Testing User Notifications and Delivery Logic

User-facing communication is more than just an API request—it's about delivering the right message to the right user at the right time. Generative AI helps test:

- Time-based triggers: Daily summaries, scheduled messages.
- Conditional logic: If-else branches based on user activity or preferences.
- Multilingual content: Ensures translations are correct and properly rendered.
- Format validation: Testing across email clients, mobile devices, or push platforms.

By generating these tests automatically, AI enables QA teams to simulate real customer journeys with minimal human input.
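One check that fits the multilingual and format items above is confirming that every localized template renders without leftover placeholders. The Python sketch below assumes a `{{name}}` placeholder syntax and three made-up templates; neither is taken from any particular platform.

```python
import re

# Assumed placeholder syntax: {{field_name}}
PLACEHOLDER = re.compile(r"\{\{\s*(\w+)\s*\}\}")

# Hypothetical localized templates. The French one contains a deliberate
# mistake: it refers to "order_no" instead of "order_id".
TEMPLATES = {
    "en": "Hi {{user_name}}, your order {{order_id}} is confirmed.",
    "de": "Hallo {{user_name}}, Ihre Bestellung {{order_id}} ist bestätigt.",
    "fr": "Bonjour {{user_name}}, votre commande {{order_no}} est confirmée.",
}


def render(template, data):
    """Fill known placeholders; leave unknown ones in place so they can be detected."""
    return PLACEHOLDER.sub(
        lambda match: str(data.get(match.group(1), match.group(0))), template
    )


def unresolved(rendered):
    """Return the names of placeholders that survived rendering."""
    return PLACEHOLDER.findall(rendered)


data = {"user_name": "Ada", "order_id": "A-1001"}

failures = {}
for locale, template in TEMPLATES.items():
    leftover = unresolved(render(template, data))
    if leftover:
        failures[locale] = leftover

print(render(TEMPLATES["en"], data))
print(failures)
```

This check only catches unresolved placeholders. Whether a translation is actually correct still requires human review or a separate language-aware check.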

Advanced Error Handling and Recovery Validation

No communication system is immune to failure. APIs might time out, gateways may reject requests, and users might go offline.

Generative AI testing tools can simulate these conditions by:

- Mimicking network errors or slow responses.
- Triggering third-party API outages.
- Testing retry and failover mechanisms.
- Verifying that fallback messages (like SMS after email failure) are sent.

This ensures that systems behave gracefully under pressure—maintaining trust and performance even during edge-case scenarios.
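Retry and fallback behavior of this kind can be exercised with scripted test doubles instead of real outages. In the Python sketch below, `FakeGateway` and the `deliver` policy are hypothetical; a production implementation would typically also wait between retries, which is omitted here to keep the example short.

```python
class FakeGateway:
    """Test double that replays a scripted list of outcomes, one per send attempt."""

    def __init__(self, name, outcomes):
        self.name = name
        self.outcomes = list(outcomes)
        self.attempts = 0

    def send(self, message):
        self.attempts += 1
        # Once the script runs out, keep failing with a timeout.
        outcome = self.outcomes.pop(0) if self.outcomes else "timeout"
        if outcome != "ok":
            raise ConnectionError(f"{self.name}: {outcome}")
        return f"{self.name}:delivered"


def deliver(message, primary, fallback, max_retries=2):
    """Assumed policy: try the primary channel, retry on failure, then fail over."""
    for _ in range(max_retries + 1):
        try:
            return primary.send(message)
        except ConnectionError:
            continue
    return fallback.send(message)


# Scenario 1: the email gateway recovers on the second attempt, so no SMS is sent.
email = FakeGateway("email", ["timeout", "ok"])
sms = FakeGateway("sms", ["ok"])
result_recovered = deliver("Your order shipped", email, sms)
attempts_recovered = (email.attempts, sms.attempts)

# Scenario 2: the email gateway stays down, so the SMS fallback must fire.
email_down = FakeGateway("email", ["timeout", "gateway_rejected", "timeout"])
sms_backup = FakeGateway("sms", ["ok"])
result_fallback = deliver("Your order shipped", email_down, sms_backup)
attempts_fallback = (email_down.attempts, sms_backup.attempts)

print(result_recovered, attempts_recovered)
print(result_fallback, attempts_fallback)
```

Asserting on the attempt counts as well as the final result matters: a system that reaches the right channel but retries ten times, or sends both an email and an SMS, would still pass a result-only check.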

Real-World Use Cases: Messaging Platforms and AI QA

Companies using platforms like SendBridge can benefit from generative AI in the following ways:

- API contract testing: AI can auto-validate schema changes and ensure backward compatibility.
- Load simulation: Tools can generate concurrent test flows to mimic production traffic.
- Personalization QA: Validate thousands of unique messages with dynamic placeholders.
- Regression testing: Catch bugs introduced by new features or content templates.

These AI-driven capabilities bring new confidence to development and release cycles, especially in high-stakes communication systems.
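For the contract-testing item, a backward-compatibility check on a request schema might look like the Python sketch below. The flat schema format (`type` and `required` per field) and the field names are simplified assumptions; real contracts are usually expressed in a format such as OpenAPI or JSON Schema and need a fuller comparison.

```python
def breaking_changes(old_schema, new_schema):
    """Compare two flat request schemas and list changes that could break clients.

    Each schema maps a field name to {"type": str, "required": bool}.
    """
    problems = []
    for field, old in old_schema.items():
        new = new_schema.get(field)
        if new is None:
            problems.append(f"removed field: {field}")
        elif new["type"] != old["type"]:
            problems.append(
                f"type changed: {field} ({old['type']} -> {new['type']})"
            )
    for field, new in new_schema.items():
        # Existing clients do not send fields that did not exist before.
        if field not in old_schema and new["required"]:
            problems.append(f"new required field: {field}")
    return problems


# Hypothetical "send message" request schema, before and after a change.
v1 = {
    "to": {"type": "string", "required": True},
    "body": {"type": "string", "required": True},
    "channel": {"type": "string", "required": False},
}
v2 = {
    "to": {"type": "string", "required": True},
    "body": {"type": "object", "required": True},
    "priority": {"type": "integer", "required": True},
}

print(breaking_changes(v1, v1))
print(breaking_changes(v1, v2))
```

Adding an optional field would not be reported, since existing clients can keep omitting it. That distinction between additive and breaking changes is the core of a backward-compatibility check.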

How testRigor Simplifies API Testing with Generative AI

testRigor is a leading platform that leverages generative AI in software testing to create, execute, and maintain intelligent test suites.

It stands out for:

- Plain English test creation: No code or scripting required.
- Real-time adaptability: Tests adjust to application changes automatically.
- Broad platform coverage: Supports testing across web, mobile, and API endpoints.
- Self-healing capabilities: Automatically identifies and updates broken tests caused by UI or logic changes.

For QA teams working with communication APIs, testRigor enables full message flow validation—covering everything from API request to user delivery—without the overhead of maintaining complex scripts.

The Future of API Testing: Smarter, Faster, More Resilient

As communication platforms evolve, so must their testing strategies. Generative AI is not just a trend—it's becoming the backbone of modern QA.

Here's what the future looks like:

- Shorter QA cycles: With automated test generation, teams can release faster.
- More resilient systems: AI identifies gaps and stress points before users do.
- Greater test coverage: Every edge case, scenario, and message type is tested automatically.
- Improved collaboration: Non-technical stakeholders can contribute to QA using plain-language inputs.

In a world where every message counts, leveraging AI for comprehensive, intelligent testing is not just beneficial—it's essential.